Geeta Chauhan’s latest work shows a 24-fold reduction in carbon emissions compared with GPT-3. However, AI’s popularity and its exponential power undermine much of the climate action in force today and call into question its potential to be part of the solution. We need a solution that allows AI to flourish while arresting its carbon footprint. So, what do we do?

Tempering the carbon addiction

As always, technology will drag us out of this predicament. For the explosion of AI to be sustainable, advanced computing must come to the fore and do the heavy lifting for many tasks that are currently performed by AI. The good news is that we already have advanced computing technologies that are primed to execute these tasks more efficiently and quickly than AI, with the added benefit of using much, much less energy. In short, advanced computing is the most effective tool we have to temper AI’s carbon addiction. With it, we can slow the creep of climate change.

A number of advanced computing technologies are emerging that can solve some of the problems AI is currently tackling. For example, quantum computing is superior to AI in drug discovery. As humans live longer, they are encountering, in ever greater numbers, new diseases that are complex and untreatable. So far, drug development has focused on rare events within a dataset and on making educated guesses to design the right drugs to target and bind to the proteins that cause disease. This is called the “better than The Beatles” problem, where new drugs offer only modest improvements on already successful therapeutics.

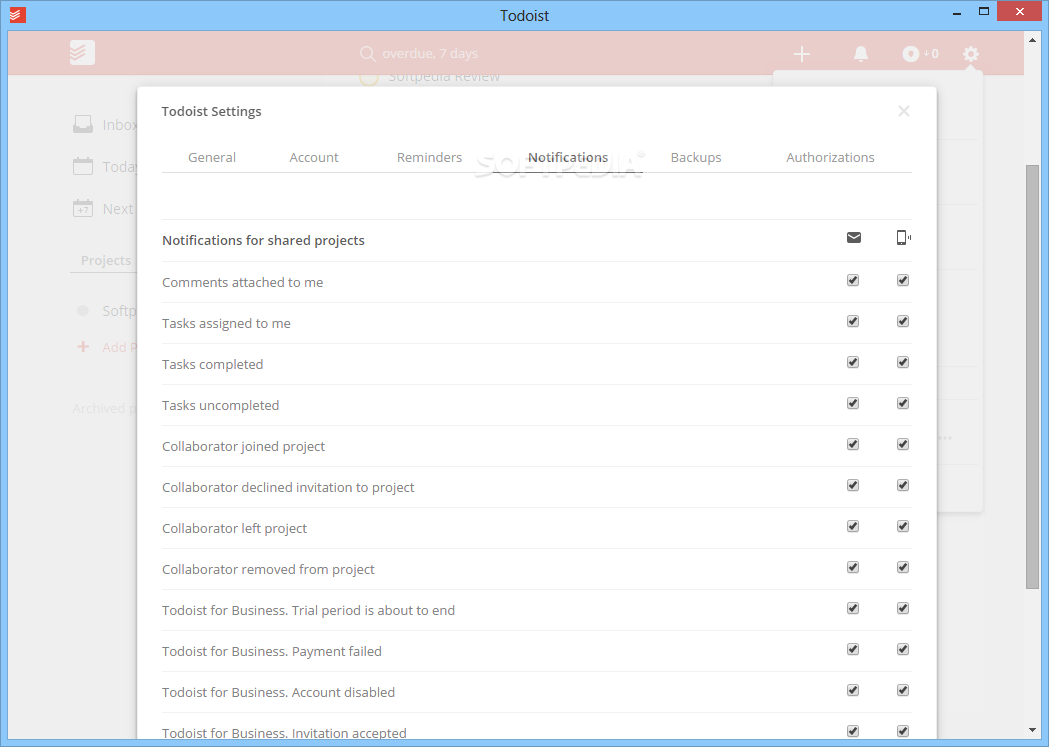


That’s the amount of electricity 120 U.S. homes use annually. As we move into the GPT-4 era and the models get larger, the energy needed to train them grows. GPT-3 was 100 times larger than its predecessor GPT-2, and GPT-4 was ten times the size of GPT-3. All the while, larger models are being released more quickly. GPT-4 arrived in March 2023, nearly four months after ChatGPT (powered by GPT-3.5) was released at the end of November 2022.

For balance, we shouldn’t assume that AI’s carbon footprint will continue growing as new models and companies emerge in the space. Geeta Chauhan, an AI engineer at Meta, is using open-source software to reduce the operational carbon footprint of LLMs.

The power that AI uses has been increasing for years now. For example, the computing power required to train the largest AI models doubled roughly every 3.4 months, increasing 300,000 times between 20. This expansion brings opportunities to solve major real-world problems in everything from security and medicine to hunger and farming.
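To see how the two figures in the doubling claim fit together, here is a rough back-of-the-envelope check in Python. It assumes steady exponential growth, which is a simplification; the function names are made up for illustration and are not taken from the article. A 300,000-fold increase works out to roughly 18 doublings, and at 3.4 months per doubling that is a little over five years.

```python
import math


def doublings_for_growth(growth_factor: float) -> float:
    """Number of doublings needed to grow by growth_factor (illustrative helper)."""
    return math.log2(growth_factor)


def months_for_growth(growth_factor: float, doubling_months: float) -> float:
    """Months needed to reach growth_factor if the quantity doubles every doubling_months."""
    return doublings_for_growth(growth_factor) * doubling_months


# Figures quoted in the article: 300,000-fold growth, doubling every 3.4 months.
growth = 300_000
doubling_period_months = 3.4

n_doublings = doublings_for_growth(growth)
months = months_for_growth(growth, doubling_period_months)

print(f"{n_doublings:.1f} doublings, about {months:.0f} months ({months / 12:.1f} years)")
```

The two numbers are therefore consistent with each other over a span of about five years, whatever the exact start and end dates were.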


That’s a lot of people using AI as ubiquitously as the internet, from using ChatGPT to craft emails and write code to using text-to-image platforms to make art.

AI will contribute as much as $15.7 trillion to the global economy by 2030, which is greater than the GDP of Japan, Germany, India and the UK.
